The products and services mentioned below were selected independent of sales and advertising. However, Simplemost may receive a small commission from the purchase of any product or service through an affiliate link to the retailer’s website.

Shopping online is super convenient, but it’s also a hit-or-miss proposition. Just because a shirt looks fabulous on the model in the photo doesn’t mean it will do you any favors. The shopping gurus at Google Shopping are aware of this problem. Customers on the internet aren’t exactly shy with their complaints! (Plus, research is kind of Google’s thing.)

According to Lilian Rincon, Google’s senior director of product, Shopping, 42% of online shoppers don’t feel represented by models in images, while 59% end up unsatisfied with an item they bought online because it didn’t look right on them.

So the company has come up with a solution: On June 14, Google released a new “virtual try-on” feature to its Shopping tab that uses artificial intelligence to predict how an item of clothing will fit on someone who looks like you.

How Google’s New Virtual Try-On Feature Works

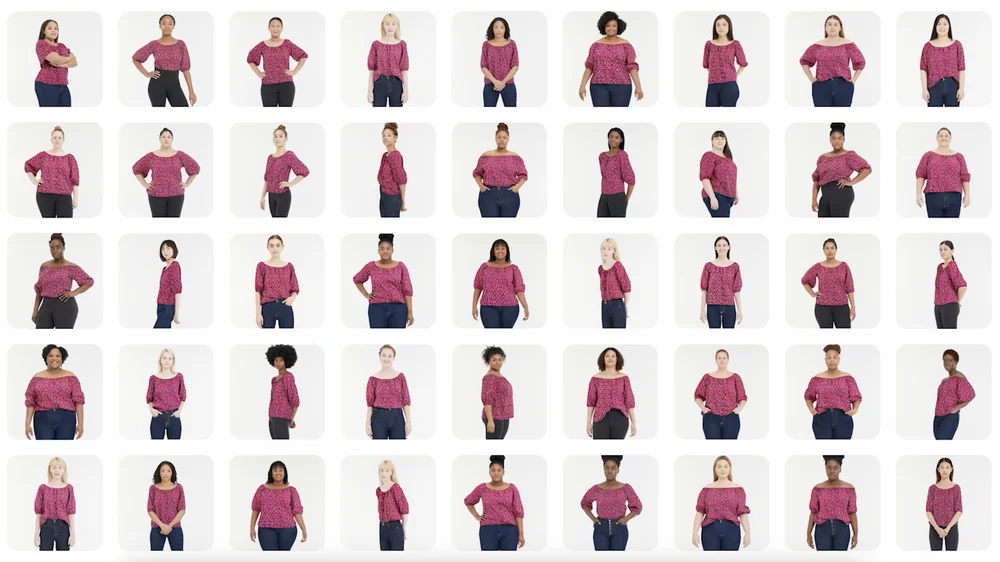

Basically, the site uses image generation technology to render a version of the shirt you’re looking at on the body type of your choice. You have 40 different bodies to choose from, in a range of skin tones, body shapes, ethnicities, hair types and sizes that span from XXS to 4XL.

The AI can predict how the shirt might look in real time as it drapes, folds, clings and stretches over the body, even creating shadows on the model you choose. This is all based on a diverse set of real models in various poses.

American shoppers can use this feature for women’s tops from the following brands: Anthropologie, Everlane, H&M and Loft. All you need to do is look for the “Try On” badge on select items of clothing, then choose the model who looks the most like you.

Google plans to add more clothing brands in the coming months and to extend the feature to men’s clothing.

The Technology Behind the Virtual Try-on Feature

If you want to dive into the technology Google developed to make all this work, Ira Kemelmacher-Shlizerman, Google’s senior staff research scientist, Shopping, explains in Google’s blog post about the new feature that it relies on a new “diffusion-based” AI model.

Diffusion is the process of gradually adding random “noise” to an image’s pixels until it’s unrecognizable. A neural network is then trained to remove that noise step by step until the original image is reconstructed. Once the AI has learned what a “clean” image is supposed to look like, it can apply that knowledge to create new variations of it.
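For the curious, here’s a toy Python sketch of the noise-adding half of diffusion and of how a noise estimate undoes it. It is not Google’s code: it assumes the textbook DDPM-style recipe (a linear noise schedule from 0.0001 to 0.02 over 1,000 steps), a made-up four-pixel “image,” and, in place of a trained network, the exact noise that was added. A real model has to learn to predict that noise, which is the hard part.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, linearly spaced (an assumed DDPM-style schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bars(betas):
    """Running product of (1 - beta): the share of the original signal left after each step."""
    bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        bars.append(running)
    return bars


def add_noise(pixels, alpha_bar, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    noisy = [signal_scale * p + noise_scale * n for p, n in zip(pixels, noise)]
    return noisy, noise


def remove_noise(noisy, predicted_noise, alpha_bar):
    """Invert add_noise given a noise estimate (here the true noise stands in for a trained model)."""
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [(x - noise_scale * n) / signal_scale for x, n in zip(noisy, predicted_noise)]


rng = random.Random(0)
image = [1.0, -1.0, 0.5, -0.5]  # hypothetical toy "image", pixel values scaled to [-1, 1]
bars = alpha_bars(linear_beta_schedule(1000))

noisy, noise = add_noise(image, bars[-1], rng)   # by the last step, almost all signal is gone
restored = remove_noise(noisy, noise, bars[-1])  # matches `image` up to floating-point error
```

Generating a brand-new image runs this in reverse: start from pure noise and let the trained model strip away a little predicted noise at each step.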

Google used a pair of images: one of an item of clothing and one of a person. Each image is fed to its own neural network, and the two networks share information with each other through a process called “cross-attention.” The result is a photorealistic image of the person wearing the garment.
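Here’s a bare-bones Python sketch of cross-attention itself, to make the idea concrete. It’s a simplification, not Google’s architecture: one attention head, no learned projection matrices, and tiny made-up feature vectors standing in for what the person and garment networks would produce. Each person feature (a “query”) scores every garment feature (a “key”), turns those scores into weights with a softmax, and takes a weighted average of the garment features (the “values”).

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention: queries come from one image, keys/values from the other."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    outputs = []
    for q in queries:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)
        # Weighted average of the garment's value vectors, one output per person feature.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return outputs


# Hypothetical 2-D features: two from the person image, three from the garment image.
person_features = [[1.0, 0.0], [0.0, 1.0]]
garment_keys = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
garment_values = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]

blended = cross_attention(person_features, garment_keys, garment_values)
```

In a full model the queries, keys and values are learned projections of many feature vectors per image, and this step repeats across layers, which is roughly how details from the garment image get routed to the right places on the person.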

The AI model was trained using Google’s Shopping Graph, a dataset that contains 35 billion product listings, so that it can eventually generate images of millions of shirts on people of every body type, from all different angles.

It’s a bit more advanced than what you see in a famous scene in 1995’s “Clueless,” when Cher uses a computer to pick out her outfit for school. Kemelmacher-Shlizerman referenced the scene when discussing the tech.

“We’ve come a long way since Cher’s closet, though,” Kemelmacher-Shlizerman said.

AI Could Be the Wave of the Future in Shopping Circles

Google Shopping has also introduced a new feature that will help online shoppers find alternatives to an article of clothing, such as a similar but less expensive option, or the same shirt in a different color or pattern.

With the help of machine learning and visual matching algorithms, customers will also be able to shop across different online clothing stores to find an item that best matches their tastes and needs. This feature is also limited to tops right now.
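Google hasn’t detailed how its visual matching works, but a common approach to “find something that looks like this” is to turn each product photo into a list of numbers (an embedding) and rank listings by how close those numbers are. Here’s a hedged Python sketch of that idea, with an optional price cap for the “similar but less expensive” case. The `Listing` type, the three-number embeddings and the prices are all hypothetical; a real system would get much longer embeddings from an image model.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Listing:
    name: str               # hypothetical product name
    price: float            # price in dollars
    embedding: List[float]  # hypothetical image embedding from some vision model


def cosine_similarity(a, b):
    """1.0 means the vectors point the same way (visually alike); near 0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def similar_items(query: Listing, catalog: List[Listing], max_price: Optional[float] = None, top_k: int = 3):
    """Rank other listings by visual similarity to `query`, optionally only those at or under max_price."""
    candidates = [
        item for item in catalog
        if item is not query and (max_price is None or item.price <= max_price)
    ]
    candidates.sort(key=lambda item: cosine_similarity(query.embedding, item.embedding), reverse=True)
    return candidates[:top_k]


tee = Listing("striped tee", 40.0, [0.9, 0.1, 0.3])
catalog = [
    tee,
    Listing("striped tank", 25.0, [0.85, 0.15, 0.35]),
    Listing("floral blouse", 30.0, [0.1, 0.9, 0.2]),
    Listing("striped tee, premium", 90.0, [0.9, 0.1, 0.31]),
]

cheaper = similar_items(tee, catalog, max_price=tee.price)
print([item.name for item in cheaper])  # ['striped tank', 'floral blouse']
```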

Google already uses a similar AI feature to allow cosmetics shoppers to see what a product looks like on a range of skin tones. According to Archana Kannan, Google’s group product manager, Shopping, this feature was created to help customers understand how makeup from brands like L’Oreal, MAC Cosmetics, Black Opal and Charlotte Tilbury would truly look on them.

Google isn’t the first company to try this, either. Amazon already has a feature that lets you virtually try on eyeglasses and shoes, for example. Many other sites have incorporated similar technology or are looking into additional ways to help reduce the $800 million in returns generated each year by the online garment e-commerce industry.